First, as we move forward, please keep Kryder’s Law in mind (see the LM1 Emergent Technology sub-menu).

This menu and sub-menu area contains:

1. Lecture recording of the text material as presented in class (i.e., textbook material plus additional content), embedded below

2. RAID presentation (still under construction) in the submenu. You are responsible for the concepts of striping, mirroring and MTTF (mean time to failure) as presented in the video, but not for the individual RAID levels beyond RAID levels 0 and 1.

3. Forensics lecture recording, located in the OS submenu, with a brief intro and a 2nd lecture recording (Pt 2)
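Since striping, mirroring and MTTF are the RAID concepts you are responsible for, here is a minimal Python sketch of them for RAID 0 and RAID 1 only. The helper names are hypothetical, and the MTTF formulas are the usual simplified approximations that assume independent disk failures at a constant rate; the 100,000-hour disk MTTF and 10-hour repair time are illustrative numbers, not measurements.

```python
from typing import List

def stripe(blocks: List[str], n_disks: int) -> List[List[str]]:
    """RAID 0 (striping): block i goes to disk i % n_disks; no redundancy."""
    return [blocks[d::n_disks] for d in range(n_disks)]

def mirror(blocks: List[str], n_disks: int) -> List[List[str]]:
    """RAID 1 (mirroring): every disk holds a full copy of every block."""
    return [list(blocks) for _ in range(n_disks)]

def raid0_mttf(disk_mttf_hours: float, n_disks: int) -> float:
    """Losing any one disk loses the whole stripe set, so MTTF shrinks by about a factor of n."""
    return disk_mttf_hours / n_disks

def mirrored_pair_mttdl(disk_mttf_hours: float, repair_hours: float) -> float:
    """Mean time to data loss for a 2-disk mirror: the second disk must fail
    before the first one is repaired (approximation for MTTF >> repair time)."""
    return disk_mttf_hours ** 2 / (2 * repair_hours)

print(stripe(["B0", "B1", "B2", "B3", "B4"], 2))  # [['B0', 'B2', 'B4'], ['B1', 'B3']]
print(raid0_mttf(100_000, 4))                     # 25000.0
print(mirrored_pair_mttdl(100_000, 10))           # 500000000.0
```

Striping spreads blocks so several disks can work in parallel (speed) but makes the array less reliable than one disk; mirroring trades capacity for a dramatic increase in time to data loss.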

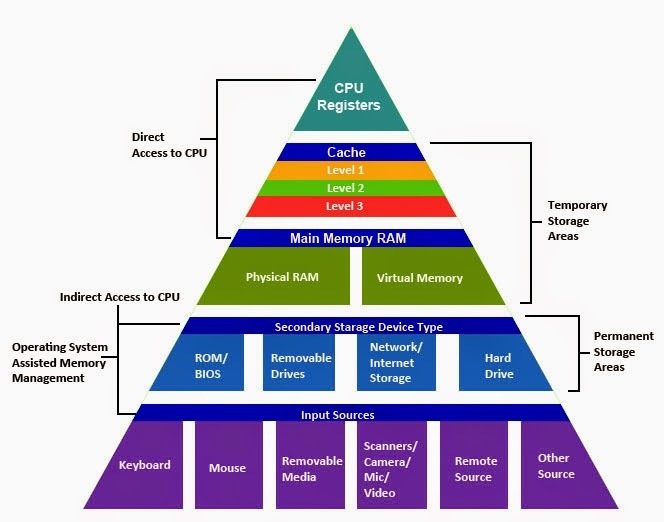

Memory/Storage Hierarchy

Recall that a computer architecture defines not only the components but also their relationships, and it is necessary to understand the Memory Hierarchy, which categorizes memory/storage according to access time, complexity and expense: http://en.wikipedia.org/wiki/Memory_hierarchy.

In the following image, the memory at the peak of the pyramid is the fastest but also the most expensive per byte (incredibly expensive); as you proceed lower in the pyramid, access times increase but the cost goes down. On this basis, modern computers strive to balance speed against cost/affordability.

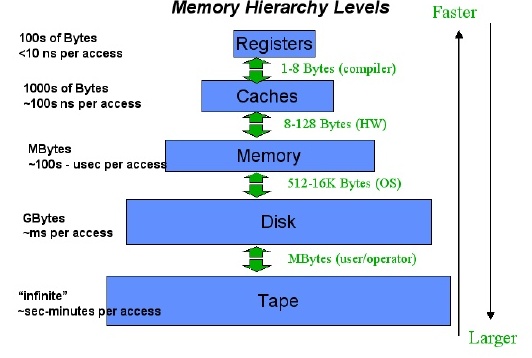

Now let’s look at the memory hierarchy access times and the effect the access times have on computation.

Look at the difference between the CPU’s register speed, measured in nanoseconds, and our hard drives, measured in milliseconds. The difference between a nanosecond and a millisecond is abstract to us, so for a frame of reference let’s treat the CPU’s nanosecond (0.000000001 of a second) as one second, since we understand how long a second takes. A millisecond is 0.001 of a second, so a millisecond is 1 million times longer than a nanosecond; on our scale, 1 millisecond becomes 1,000,000 seconds. There are 60 seconds in a minute, so 1,000,000 / 60 ≈ 16,666 minutes. There are 60 minutes in an hour, so 16,666 / 60 ≈ 277 hours. There are 24 hours in a day, so 277 / 24 ≈ 11.5 days. Putting this in a temporal context we can understand: if the CPU operated with a 1-second clock cycle, it would wait many days (about 11.5 in this example) for a piece of data to be delivered from storage.
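The same scaling can be checked in a few lines of Python. This is a minimal sketch assuming, as the text does, a 1 ns CPU cycle and a 1 ms storage access; the names are illustrative. The unrounded result is about 11.57 days; the text truncates at each step and arrives at about 11.5.

```python
CPU_CYCLE_NS = 1               # the text's CPU/register time scale: 1 ns
STORAGE_ACCESS_NS = 1_000_000  # the text's storage access: 1 ms expressed in ns

SECONDS_PER_DAY = 60 * 60 * 24

def scaled_wait_days(cycle_ns: int, access_ns: int) -> float:
    """Days a 'one-second-per-cycle' CPU would wait for a single storage access."""
    cycles_waited = access_ns / cycle_ns      # how many CPU cycles fit in one access
    return cycles_waited / SECONDS_PER_DAY    # 1 cycle == 1 second, so cycles -> seconds -> days

days = scaled_wait_days(CPU_CYCLE_NS, STORAGE_ACCESS_NS)
print(f"{days:.2f} days")  # 11.57 days
```

Plugging in a different access time (for example, a slower disk) shows how quickly the wait grows in human terms.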

The lecture recording is below, but please continue to read the material on this page.

Memory/Storage Hierarchy

The Memory or Storage Hierarchy is located here if you need a refresher: http://en.wikipedia.org/wiki/Memory_hierarchy.

Magnetic Disks

First, please read the following on HDDs: http://en.wikipedia.org/wiki/Hard_disk_drive

With respect to magnetic disks, please recall that instructions and data must be in memory to be processed, and that the HDD suffers from mechanical (access) and transfer latencies that make it orders of magnitude slower than memory and the CPU. (Recall my memory hierarchy computation, where I treat one clock cycle as 1 second, a unit humans are more familiar with; on this basis it takes about 11.5 days to get data from HDD storage to the CPU.)
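To see where the mechanical and transfer latencies come from, here is a minimal Python sketch of the usual breakdown: access time = seek time (moving the arm) + rotational latency (waiting, on average, half a revolution for the sector to arrive) + transfer time. The drive parameters (9 ms seek, 7200 RPM, 100 MB/s) are hypothetical values chosen for illustration.

```python
def hdd_access_ms(seek_ms: float, rpm: int, request_bytes: int, transfer_mb_per_s: float) -> float:
    """Approximate time to service one HDD request, in milliseconds."""
    ms_per_revolution = 60_000 / rpm          # 60,000 ms in one minute
    rotational_ms = ms_per_revolution / 2     # on average, wait half a revolution
    transfer_ms = request_bytes / (transfer_mb_per_s * 1_000_000) * 1_000
    return seek_ms + rotational_ms + transfer_ms

# Hypothetical drive: 9 ms average seek, 7200 RPM, 100 MB/s transfer, one 4 KB request.
t = hdd_access_ms(seek_ms=9.0, rpm=7200, request_bytes=4096, transfer_mb_per_s=100.0)
print(f"{t:.2f} ms")  # 13.21 ms
```

Notice that moving 4 KB takes only about 0.04 ms; nearly all of the time is mechanical (seek plus rotation), which is why the HDD sits so far down the hierarchy.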

The smallest addressable unit on a disk is the sector; however, sectors are more often grouped into clusters containing a power-of-2 number of sectors. Clusters are typically at least 2048 bytes, although smaller USB drives can be formatted with smaller clusters (please see the link below for a description of tracks, sectors and clusters). This means that if the computer needs to read or modify a single byte on the disk, it must transfer the entire sector/cluster.
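A minimal Python sketch of the cluster arithmetic: which clusters must be transferred to touch a given byte range. It assumes a hypothetical 4096-byte cluster (8 sectors of 512 bytes) and treats offsets as positions in one contiguous run of clusters, ignoring how a real file system maps files to clusters.

```python
CLUSTER_SIZE = 4096  # hypothetical: 8 sectors x 512 bytes

def clusters_touched(offset: int, length: int, cluster_size: int = CLUSTER_SIZE) -> range:
    """Indexes of the clusters that hold bytes [offset, offset + length)."""
    if length <= 0:
        return range(0)
    first = offset // cluster_size
    last = (offset + length - 1) // cluster_size
    return range(first, last + 1)

def bytes_transferred(offset: int, length: int, cluster_size: int = CLUSTER_SIZE) -> int:
    """Whole clusters move between disk and memory, never single bytes."""
    return len(clusters_touched(offset, length, cluster_size)) * cluster_size

print(bytes_transferred(10_000, 1))  # 4096: one byte still costs a whole cluster
print(bytes_transferred(4_095, 2))   # 8192: two bytes straddling a boundary cost two clusters
```

Modifying that single byte is a read-modify-write: the whole cluster is read into memory, changed there, and written back.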

http://en.wikipedia.org/wiki/Disk_sector

Now putting this all together and recalling the memory/storage hierarchy and its categorization, we understand why having data and programs in memory speeds execution and why increasing memory size often results in significant performance improvements.
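One standard way to quantify this is effective access time: if a fraction h of requests (the hit ratio) is served from memory and the rest must go to disk, the average access time is h × t_mem + (1 - h) × t_disk. More memory generally means a higher hit ratio. A minimal Python sketch; the 100 ns memory and 10 ms disk times are hypothetical round numbers.

```python
def effective_access_ns(hit_ratio: float, memory_ns: float, disk_ns: float) -> float:
    """Average access time when `hit_ratio` of requests are satisfied from memory."""
    return hit_ratio * memory_ns + (1 - hit_ratio) * disk_ns

MEMORY_NS = 100        # hypothetical RAM access time
DISK_NS = 10_000_000   # hypothetical HDD access time (10 ms)

for h in (0.90, 0.99, 0.999):
    print(f"hit ratio {h}: {effective_access_ns(h, MEMORY_NS, DISK_NS):,.0f} ns")
```

Because the disk term dominates, raising the hit ratio from 99% to 99.9% cuts the average access time by roughly a factor of ten, which is why adding memory can pay off so visibly.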

Solid State Drives (SSD)

SSDs are both the present and the future. It is important to note that flash memory can be based on NAND or NOR technology (NAND and NOR logic are coming in Programming Languages).

NAND flash is less expensive than NOR flash, is block-accessible (similar to HDDs), and is the basis of SSD storage devices => fast erase and write, and very good read performance.

NOR flash is expensive and byte-addressable (like RAM), so code can be executed directly from it; it is rapidly replacing EEPROM => fast read performance but slower write performance. Please see here: http://en.wikipedia.org/wiki/Ssd
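To make "block-accessible" concrete, here is a toy Python model of NAND behavior: reads and writes (programs) work on whole pages, programming can only clear bits (1 to 0), and only an erase of a whole block sets bits back to 1. The page and block sizes are toy values, and the naive in-place `update_byte` is for illustration only; real SSD controllers typically write the new data to an already-erased page and remap it rather than erasing in place.

```python
PAGE_SIZE = 4        # bytes per page (toy value; real pages are kilobytes)
PAGES_PER_BLOCK = 2  # pages per erase block (toy value)

class ToyNand:
    """Simplified NAND: read/program whole pages, erase whole blocks."""

    def __init__(self, n_blocks: int) -> None:
        # Erased flash reads as all 1 bits (0xFF).
        self.cells = bytearray(b"\xff" * n_blocks * PAGES_PER_BLOCK * PAGE_SIZE)
        self.erase_count = 0

    def read_page(self, page: int) -> bytes:
        start = page * PAGE_SIZE
        return bytes(self.cells[start:start + PAGE_SIZE])

    def program_page(self, page: int, payload: bytes) -> None:
        # Programming can only clear bits (1 -> 0); setting bits needs an erase.
        start = page * PAGE_SIZE
        for i, value in enumerate(payload[:PAGE_SIZE]):
            self.cells[start + i] &= value

    def erase_block(self, block: int) -> None:
        size = PAGES_PER_BLOCK * PAGE_SIZE
        start = block * size
        self.cells[start:start + size] = b"\xff" * size
        self.erase_count += 1

    def update_byte(self, page: int, offset: int, value: int) -> None:
        """Naive in-place update: copy the block, erase it, reprogram every page."""
        block = page // PAGES_PER_BLOCK
        first = block * PAGES_PER_BLOCK
        saved = [bytearray(self.read_page(p)) for p in range(first, first + PAGES_PER_BLOCK)]
        saved[page - first][offset] = value
        self.erase_block(block)
        for i, content in enumerate(saved):
            self.program_page(first + i, bytes(content))

flash = ToyNand(n_blocks=2)
flash.program_page(0, b"ABCD")
flash.update_byte(page=0, offset=1, value=ord("z"))
print(flash.read_page(0), flash.erase_count)  # b'AzCD' 1
```

Changing one byte forced a whole-block erase and rewrite; a byte-addressable memory such as NOR can instead be read one byte at a time.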

A recent development is the hybrid drive (what Apple calls “the Fusion Drive”), which blends magnetic and SSD drives to obtain affordable performance improvements. Apple’s Fusion Drive differs from the text’s presentation of an integral hybrid drive (a magnetic HD with an SSD cache): the Fusion Drive has a separate HD and SSD, and OS X manages their complementary functionality. OS X tracks user computing behavior and migrates often-used apps to the SSD for quicker access.
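The idea of tracking usage and migrating frequently used apps can be sketched as a simple greedy "hot data" placement: count accesses, then place the most-used items on the SSD until it is full. This is a hypothetical policy written for illustration, not Apple’s actual algorithm; the app names, sizes and capacity are made up.

```python
from collections import Counter
from typing import Dict, Iterable, List

def choose_ssd_residents(access_log: Iterable[str], sizes_gb: Dict[str, int],
                         ssd_capacity_gb: int) -> List[str]:
    """Greedy hot-data placement: most frequently used items go to the SSD until it is full."""
    counts = Counter(access_log)
    placed: List[str] = []
    used = 0
    for item, _count in counts.most_common():  # hottest items first
        if used + sizes_gb[item] <= ssd_capacity_gb:
            placed.append(item)
            used += sizes_gb[item]
    return placed  # everything else stays on the magnetic disk

log = ["mail", "browser", "mail", "editor", "mail", "browser", "game"]
sizes = {"mail": 2, "browser": 3, "editor": 1, "game": 10}
print(choose_ssd_residents(log, sizes, ssd_capacity_gb=6))  # ['mail', 'browser', 'editor']
```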

Wikipedia Hybrid Drives: http://en.wikipedia.org/wiki/Fusion_Drive

Apple Fusion Drive: http://www.zdnet.com/apples-fusion-drive-hybrid-done-right-7000006248/

Error Detection: see Parity Bit for an introduction to embedding error detection in symbolic coding.
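A minimal Python sketch of even parity on 7-bit ASCII characters, with the parity bit stored in bit 7. The function names are hypothetical; the point is that the parity bit makes the count of 1 bits even, so any single flipped bit is detected, while two flipped bits cancel out and go unnoticed.

```python
def even_parity_bit(value: int) -> int:
    """1 if `value` has an odd number of 1 bits, so adding it makes the count even."""
    return bin(value).count("1") % 2

def encode(ch: str) -> int:
    """Store a 7-bit ASCII character with an even-parity bit in bit 7."""
    code = ord(ch)
    if code > 0x7F:
        raise ValueError("only 7-bit ASCII is supported in this sketch")
    return code | (even_parity_bit(code) << 7)

def check(word: int) -> bool:
    """True if the 8-bit word still has even parity (no error detected)."""
    return even_parity_bit(word) == 0

sent = encode("C")              # 'C' = 0b1000011 (three 1s) -> parity bit 1 -> 0b11000011
print(check(sent))              # True
print(check(sent ^ 0b0000100))  # False: a single flipped bit is detected
print(check(sent ^ 0b0000011))  # True: two flipped bits go undetected
```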

Cloud Storage

Cloud storage presents many cost-effective opportunities and concomitant risks (see the 2014 Apple iCloud celebrity data breach, among other concerns). We know about free public cloud storage offered by box.net, Dropbox and Google (i.e., Google Drive). In class I presented that 2 separate Chinese companies are offering 1 TB and 10 TB of free storage, respectively (search the storage tag in the right panel). If companies are offering free storage, what is their business model and what do they get out of it? To answer this question, recall from my presentation that if something is free, you are the product, and please keep big data/machine learning in mind when assessing this.

Future of Storage

Above, I stated that SSDs are our immediate future, but we also accept that we are living through emergent and disruptive change. Consider that IBM has shattered Kryder’s Law by storing 1 bit on 12 atoms, and that Harvard researchers have encoded a book in DNA and made 70 billion copies of it. Harvard scientists predict that “The total world’s information, which is 1.8 zettabytes, [could be stored] in about four grams of DNA.” You can read more about these developments in the Emergent Topics Blog under the tag Storage.
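To put the quoted prediction in perspective, the implied density can be worked out directly. This sketch takes the quote’s figures (1.8 ZB in about 4 g) at face value and assumes decimal (SI) units, where 1 ZB = 10^21 bytes and 1 TB = 10^12 bytes; integer arithmetic keeps the result exact.

```python
ZETTABYTE = 10**21  # bytes, decimal (SI) unit
TERABYTE = 10**12   # bytes, decimal (SI) unit

world_info_bytes = 18 * ZETTABYTE // 10  # 1.8 ZB, the figure quoted above
dna_grams = 4                            # "about four grams"

bytes_per_gram = world_info_bytes // dna_grams
print(f"{bytes_per_gram // TERABYTE:,} TB per gram")  # 450,000,000 TB per gram
```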

Lastly, from an applied perspective, please consider Big Data, where the typical data set is over 1 TB, and remember that data must be in memory to be processed.
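When a data set is larger than the available RAM, one common approach is to stream it through memory in fixed-size chunks so that only one piece is resident at a time. A minimal Python sketch; the 64 MiB chunk size and the per-chunk work (counting one byte value) are hypothetical placeholders for real processing.

```python
import io
from typing import BinaryIO

CHUNK_SIZE = 64 * 1024 * 1024  # 64 MiB per read; hypothetical, tune to available RAM

def count_byte_chunked(stream: BinaryIO, target: int, chunk_size: int = CHUNK_SIZE) -> int:
    """Count occurrences of one byte value while holding only one chunk in memory."""
    total = 0
    while True:
        chunk = stream.read(chunk_size)  # bring the next piece of storage into memory
        if not chunk:                    # empty bytes means end of stream
            break
        total += chunk.count(target)     # process it while it is resident
    return total

# Tiny in-memory stand-in for a large file on disk.
print(count_byte_chunked(io.BytesIO(b"abcabc"), ord("a"), chunk_size=4))  # 2
```

With a real file, the stream would come from `open(path, "rb")`; each chunk still has to travel up the hierarchy from storage, so storage speed bounds how fast the whole data set can be processed.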

Additional Topics/Resources

If nothing else, please become familiar with backup strategies, as data/information resiliency, availability and regulatory compliance are paramount in today’s environment: http://en.wikipedia.org/wiki/Back_up

- Data Backup Guide.pdf (1.98 MB)

- Data Storage - 7 tips.pdf (73.28 KB)

- Energy and Space Efficient Storage.pdf (264.4 KB)

- Hidden Costs of Storage.pdf (288.5 KB)

- Holographic Firm Claims Data Storage Density Record.doc (31 KB)

- Parity bit.pdf (58.1 KB)

- Smarter Storage Environment - Managing Data Growth and Controlling Costs.pdf (1.68 MB)